Copyright (probably) won't save anyone from AI

There is lots of justified outrage at the way AI companies have "stolen" millions of creative works to train their models. But there's every chance it's legal.

There are many reasons to be worried about AI. We’ve been worrying about it since long before it was anywhere near a reality – the novel Erewhon by Samuel Butler was written in 1872 and imagined a society that had abandoned all of its advanced machinery, long before computers were a reality.1 Terminator and Skynet beat ChatGPT by decades.

Most of our concerns now AI seems imminent are more mundane: will it take our jobs, will it be fair, will it require so much power it makes climate change worse, and the like.

But for journalists, artists and musicians there is an even more immediate concern: is it ripping off my work as it replaces me? Modern large language models like Gemini, ChatGPT, Perplexity and Claude all require huge amounts of data to train. Much of this has been done using copyrighted material, often without seeking permission in advance. OpenAI has said it would be “impossible” to train AI without such data.

AI has ingested hundreds of thousands of books – often without their authors’ knowledge or permission – as well as millions of songs, and video. A generative model cannot make a medium without having ingested it: if a system can output images, it was trained on images. The same is true for music and for videos.

This has provoked understandable fury – and lawsuits. Most of the American music industry is currently suing music AI generation startup Suno. The New York Times Company is suing OpenAI. Other lawsuits are ongoing and more are surely coming.

On the surface, the injustice is clear: AI companies have taken work and used it to train models that are now immensely valuable: OpenAI alone is currently valued at $157 billion. OpenAI admits that the copyright data used to train the models was essential for their creation. That looks like straightforward theft of value – a multi-billion dollar fortune built on the creative labour of others.

Making it worse is that AI seeks to replace creative labour for many tasks, while Google and other tech companies are essentially trying to replace journalistic work, too. Increasingly, instead of directing users to a website that can answer their question, Google is trying to take information from the web and present it directly to the user – getting rid of the chance for the source of that information to monetise it, either through advertising or subscriptions.

Creative industries and the news media see an existential threat from AI, and they know it was built and trained on their work – and continues to rely on that work. Understandably, they’re turning to the laws that are supposed to protect that work, and assuming that the AI companies must have breached them in some way.

The reality is far messier. Laws can change, and anyone trying to guess what US courts in particular will do in the second Trump era is on to a loser – but copyright law doesn’t work like most of us think it does. There is every chance that the way AI companies have used everyone else’s work could be found to be perfectly legal.

Here’s why copyright (probably) won’t save you.

A very brief history of copyright

People in power have wanted to control information for as long as either have existed. In the case of written works, this was relatively easy for a long time as (1) almost no-one could read, and (2) books were painstakingly produced in a drawn-out manual process, making it trivial to track down who had written what – and limiting its circulation if its contents were not deemed suitable.

Johannes Gutenberg ‘ruined’ all that stability in the 15th century by inventing movable type, a system that made it possible to (relatively) cheaply produce many copies of the same book, much more quickly than before. Suddenly, it was easier to spread ideas through books, hide who was behind a particular printed text, and get it into lots of people’s hands quickly.

Inevitably, those in charge decided that not just anyone could be trusted with that power. The first known formal privilege – granting a monopoly on printing to its beneficiary – dates back to Venice in 1469. If you wanted something printed in Venice, you’d have to go to Johannes de Spira. And if the authorities had a problem with something printed, they knew who to contact.

The English crown took this idea further from the 16th century onwards, granting patents or licences to particular printers for them to print certain works. Censorship was very much the run of the day at that time, and texts were submitted in advance to the Crown, which would then grant or deny permission (or a ‘right to copy’, if you will) to print it.

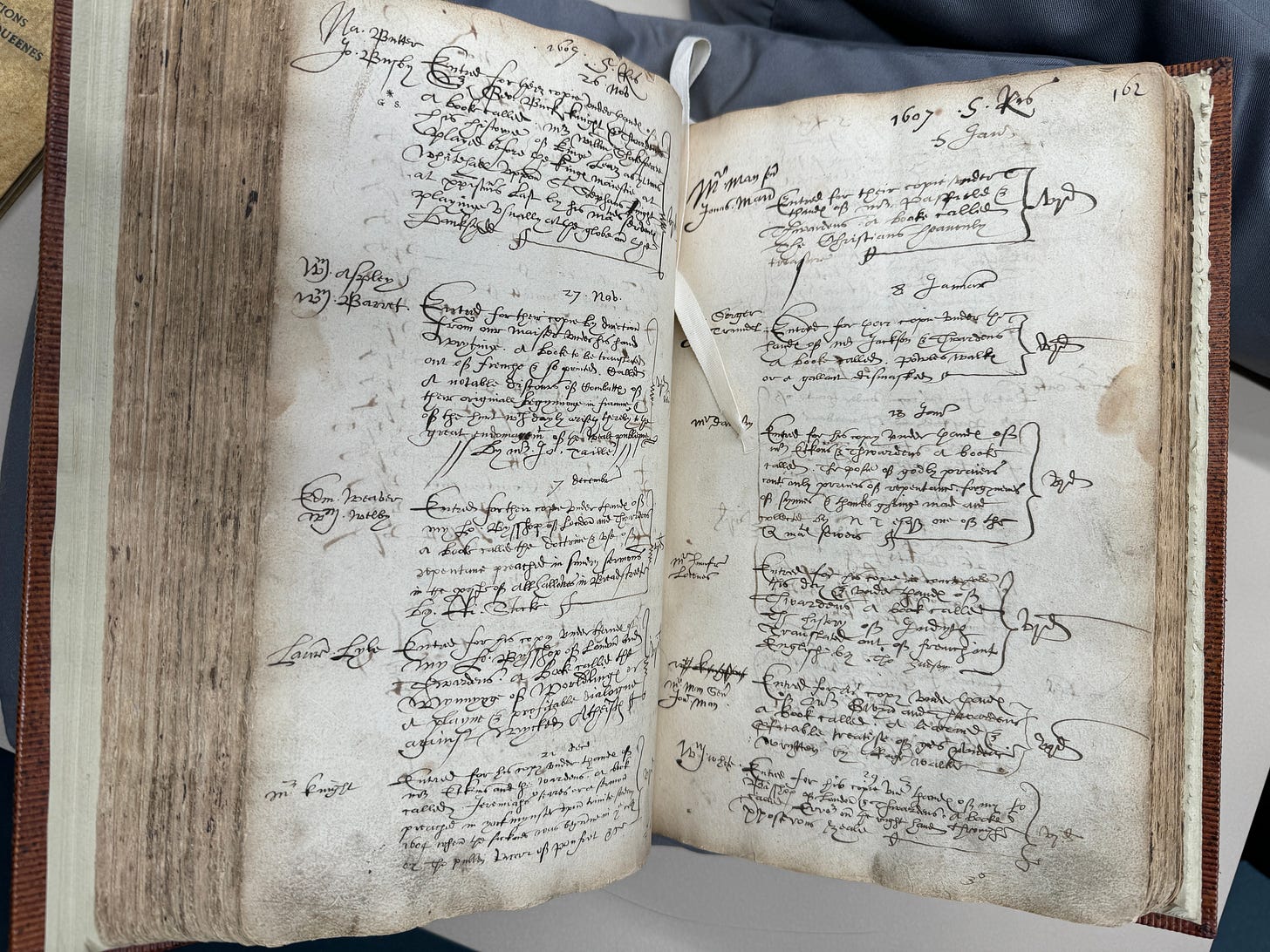

The Stationers’ Company of London (also known as “The Worshipful Company of Stationers and Newspaper Makers”) still has the record of these copyrights – the right, issued by the crown, to produce copies of a particular text2 – and will, if you ask very nicely, show you some of the old ones, like this:

If you’re very good at reading handwriting – and can zoom in enough – you’ll see that the top-left entry of these pages is for King Lear, but it makes no mention of Shakespeare. Instead, the entry is in the name of the printer of the text, who was the holder of the right to print, and as such also the person who would be held accountable for the text.

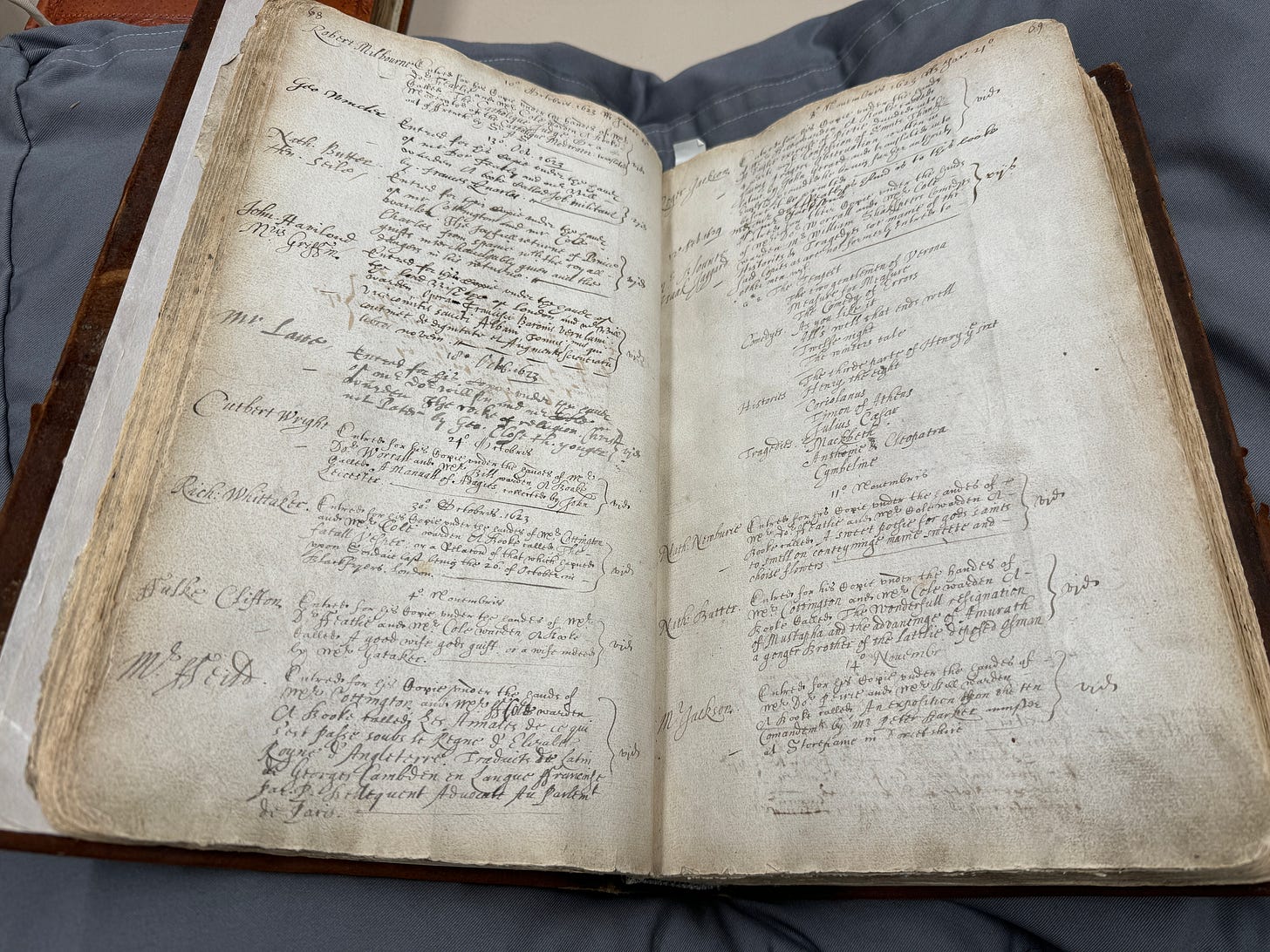

Authors were generally not mentioned at all in these entries, or if they were they were included as a detail, almost an afterthought. The next image shows the entry for one of the first collected folios of Shakespeare’s works (it is the bulleted list on the right-hand page). The copyright still belongs to the printer, but Shakespeare is mentioned as it is, after all, a collection of his plays.

Demonstrating history doesn’t repeat itself, but certainly rhymes,3 there’s certainly something familiar happening here: a new technology has come along, in the form of printing, and has changed how the creative industries – such as they were – operate.

Authors of books and plays saw rights based on their work accruing to printers, some of whom became very rich, and started to wonder if this new system was fair. Over time, authors agitated for recognition within the system, and eventually secured it – the Statute of Anne 1710 is the first copyright legislation in the world. It granted an automatic monopoly on a written work for 14 years after its first publication. Crucially, that monopoly was granted to the author, not the printer. This was the birth of the somewhat romantic, creator-centred copyright that exists today.

This author-centric version of copyright was cemented in international law in the 19th century through the Berne Convention, in no small part thanks to Victor Hugo, who enjoyed immense political influence in France at that time, and who used this to secure international agreement on countries respecting one another’s copyright laws, and on centring the French conception of the “moral right” of the author to be equally recognised.

International copyright retains that dual role: on the one hand it is a practical matter of property rights and recognition, expanded from the text of books to the script of movies and TV, the lyrics of songs, and to other creative endeavours. On the other, it retains the more ambiguous moral right for authors to be recognised as the creators of their works.

What copyright does – and what it doesn’t

If we take anything from that extremely truncated history, it’s that the history of copyright is in printing, and it comes from creative expression – who has the right to reproduce a particular finished work, be it a book, a play, a song, or similar. That’s how modern copyright functions: it’s about the particular expression of an idea, or a particular set of words in a certain order.

That means if I were going to take the Twilight book, remove Stephenie Meyer’s name, add my own and start selling it, I’m going to get in trouble. I’m not just ripping off the text of her book, but I’ve also interfered with her moral right to be identified as the author of it.

Meyer’s actual words are protected, and I wouldn’t need to come close to copying her entire book before I got myself into trouble: the European Court of Justice has found that taking a snippet as short as eleven words (without a relevant “fair use” type of exemption) can breach copyright.

However, nothing would stop me taking the ideas of Twilight and using them to make a book of my own. I could write a clunky coming-of-age novels using vampires as an unsubtle analogy for sexual awakening and male predation, drawing upon numerous other common genre tropes.

This gets exploited commercially: famously, Fifty Shades Of Grey started out as an adult fanfic of Twilight, being modified into a real-world setting partly to make sure it was creatively distinct enough from its source material so as not to trigger legal issues. If copyright prevented reusing tropes and ideas, creative work would grind to a total halt.

This is why professionals in the creative industry tend to roll their eyes when people – usually newcomers – fret about “stealing ideas”. You can’t steal an idea because they can’t be protected, and they’re generally not that important in the first place. There are only a limited number of plots, tropes and settings: the magic is almost never in the originality of the idea. Instead, it’s in the skill of the execution.

This gets particularly tricky for the news industry, though, as copyright is the law upon which news media relies for protection. It’s a distinctly imperfect tool for that purpose, because the same principle applies – the writing and the expression is protected, but the ideas in the news story or feature are not.

In fiction, the “ideas” that make up a narrative are the ones you made up. In a news story, they’re the facts upon which it’s based – which might have taken weeks or months of investigative reporting.

There is nothing stopping anyone from taking the facts from a news story and retelling them: they have no legal protection whatsoever. It is an industry norm to credit another outlet when picking up information from their news story, but provided their actual language isn’t taken, this is about norms, not law.

Some newspaper writers produce beautiful prose that is a large part of what attracts an audience to their work – but many of us aren’t hired mainly for our prose. Copyright has always had a somewhat awkward fit with news and current affairs. The AI era is making that more awkward.

So why does this make it okay for AI to steal my work?

Pulling this together, think about the last time you wrote an article, an essay, or (if you’ve done it) a book. You likely deliberately read multiple books or articles to learn the background of the issue you’re writing about. If you directly quoted any of those, you probably cited them – but if they were just background, you likely didn’t.

More than that, though, think about the books you read to get to the point of writing the essay/article/whatever in the first place – you likely couldn’t list those even if you wanted to.

A truly comprehensive bibliography of any written work would include pretty much everything you ever read – not least because it taught you how to read, influenced why you might choose one word over another, taught you to avoid a particular cliché or logical fallacy, and influenced millions of other tiny decisions.

AI doesn’t ‘learn’ like we do: however clever the output might look, AI is still essentially just “spicy autocomplete”. But its training process ends up working a bit like our learning: it has picked up how language works, as well as its simulacrum of ‘knowledge’ from everything it has ‘read’.

It is essentially producing new writing from everything it has digested, just like a human writer does. Similarly, a musician will be influenced by every piece of music they have listened to – and no-one suggests this means they owe royalties to the artists behind every song they ever heard. If they use a riff or sample one of those songs, that’s different (whether they’re human or AI) and will require credit and royalty payments, but that’s a whole separate issue.

Sometimes, AIs output chunks of text that are just reproducing copyright material upon which they were trained. These are simple – everyone agrees these violate copyright, and if they’re too common, these will result in lost cases and payouts.

But neither the media nor big tech thinks these are what their argument centres upon – it will be relatively easy to minimise this kind of obvious copyright violation. The NYT included these in their lawsuit because they generate good headlines and are an obviously winnable part of the argument. They are not core to the case.

Instead, the media is trying to argue that AIs shouldn’t be able to ingest their copyrighted material even if what it outputs doesn’t violate copyright. That’s a more difficult case to make: it is essentially asking the courts to create a new threshold, allowing behaviour from humans but not if an automated system is doing it. That could be harder than it first looks.

But when I research an article, I DON’T DOWNLOAD AND COPY MILLIONS OF DOCUMENTS AT ONCE

An AI ‘learning’ by ingesting copyrighted material feels like an injustice in a way that a human doing the same does not. Part of this is just normative: humans and AIs are different. Part of it is about the amount of money at stake, and the threat to the existing industries. But part of it is about scale: no human writer uses copyright materials in anything like the volumes of modern AI systems.

That might tempt people to think that this is why the copyright argument is winnable: if AI companies are making copies of all of this copyrighted work to power their models, surely that copy breaches copyright, even if it isn’t published to the public? This definitely feels like it’s an argument on surer footing.

However, it’s not without its problems. The first is that AI models don’t use their training data in the way many of us might imagine. If we’ve thought about how something like ChatGPT answers our questions, we might imagine that it takes our questions and looks it up against a database containing all of its training data – like we might look up a record in an archive, or a book in a library.

In reality, ChatGPT and its rivals don’t actually store their training data, let alone run queries against it. Instead, the data is used to create ‘weightings’ which influence how it responds to different prompts, and then it is discarded. There is no permanent copy of the training data packaged alongside commercial AI models – by the time the model is launched, the training data is surplus to requirements.

That doesn’t change the fact that the AI companies have created a temporary copy of all of their training data – including huge quantities of copyright material – while they made their model. A temporary copy is still a copy, isn’t it?

European, British and US courts have all considered this specific issue before. The US Supreme Court considered the question in Authors Guild v Google, a case which centred around a not-for-profit effort undertook by Google to make printed books searchable.

Google was digitising the books and producing a full digital copy, which was used to then search and display snippets. Google won the case, because it was not creating a competing product to the books, it was limiting how much copyrighted material it reproduced, and it wasn’t profiting off the copyrighted work (though the court stressed that profit wouldn’t necessarily have led to a different result).

But the relevant part of the judgment for our purposes here is this:

“We concluded that both the making of the digital copies and the use of those copies to offer the search tool were fair uses … such copying was essential to permit searchers to identify and locate the books in which words or phrases of interest to them appeared.”

Which is then followed up with this:

Complete unchanged copying has repeatedly been found justified as fair use when the copying was reasonably appropriate to achieve the copier’s transformative purpose and was done in such a manner that it did not offer a competing substitute for the original.

Essentially, both sides could point to these paragraphs as helping them out: making a complete copy of a copyrighted work can be allowed under fair use if the work is transformative – but if the new work would be a competitor to the work it used, that might change the court’s calculus.

The media will, of course, argue that an answer from an AI model is a competitor to reading a news website. AI companies will argue first that their service is not a competitor to news sites, but as a fallback they can also argue that copyright protects creative endeavour, and so if the competition is for finding out the facts, rather than the writing, this should not necessarily be protected.

UK and European precedents are less direct, but do allow for “temporary acts of reproduction” of copyrighted works in their entirety where that reproduction is essential for the provision of a lawful use of that work. This has generally been considered in the context of internet service providers and similar services.

These temporary troves of copyrighted materials are the weakest point of the AI companies’ argument, and where the media companies have the best chance of success under existing copyright law. But they are by no means a silver bullet.

So why did the AI companies try to hide what they did?

If AI companies were absolutely certain about the legal basis of what they were doing, they would have no need to hide any details about it. In reality, most of them refuse to release much information about their training data, and are even fighting against simply disclosing this information.

Some of this is simply pragmatism: there is a huge difference between something being probably legally safe and definitely legally safe. Given using copyright material was essential to getting these models operational, and lawsuits were likely all but inevitable, it was obviously better for the AI companies to delay those legal battles as long as they could – so that they were fighting them when their technology is out in the world, and investors are throwing money at them.

Had the media, publishing and music industries noticed what was happening earlier, they would have had the deeper pockets – which can influence what happens in legal fights significantly. Licensing agreements may have been introduced much earlier, on more favourable terms to the media, just to avoid the delays and costs of protracted court battles. Instead, big tech now has the deeper pockets and the political connections to fight.

Crucially, too, just because something might well be allowable under copyright law doesn’t mean it’s either good or desirable. Big tech giants like Google had a grudging deal with the media: they got incredibly rich off the media’s work by being the intermediary between users and news outlets – but they did eventually send users to the media.

In the AI era, they are trying to keep the users where they are, even though they still need the media’s raw material to do that – AI can’t say what’s happening in the news today unless someone is out in the world finding it out.

In different ways and with different facts, every other creative industry is in a similar standoff. Their work is the foundation of a new subset of the tech industry that could be a multi-trillion dollar sector – and they might be left with only the scraps at the table.

Saying that copyright might not be the winning tactic for the media and creative industries is not the same as saying what’s happening is fair, reasonable or okay. It is instead just a matter of tactics and strategy – if existing legal protections are unfit for the unique challenges posed by AI models, then the media needs to lobby for new legal frameworks.

If copyright is a false source of hope for the media against AI, then if it delays or distracts from efforts to create new laws and regulations, that only helps big tech establish a new status quo – in which almost all of the profits from creative work flow to them.

Saying that copyright might not be what saves the media isn’t a counsel of despair. Instead, it’s a call to action.

Ingesting and digesting millions upon millions of copyrighted work as part of an effort to make artists, musicians and writers redundant certainly isn’t right, but there’s every chance the courts could find that as the law stands, it’s legal. The media needs to think bigger, and it needs to start fast.

Dune’s “Butlerian Jihad” against “thinking machines” is named for Samuel Butler

Thanks to Ian Mansfield for my tour and preview of some of these old texts, which are kept in their archive.

Thanks, Mark Twain

James, it sounds like I would disagree with you on the ethics of all this, but you’re making the argument here that I’ve struggled to make in a clear way. I learned a lot and I only wish all the people in comments sections screaming “AI IS THEFT!!!” will read this.

As to this: “That doesn’t change the fact that the AI companies have created a temporary copy of all of their training data...” I’ve long said busting AI companies on this point is like busting Al Capone for tax evasion. Maybe it’s a crime, but it’s not the crime everyone is screaming about. And as you point out, the Google case offers protections. That “[c]omplete unchanged copying has repeatedly been found justified...” clause is key.

On this note: “There is nothing stopping anyone from taking the facts from a news story and retelling them: they have no legal protection whatsoever.” Thus explaining the rise of articles that seem to be no more than a retelling of a NY Times or Washington Post piece with (perhaps) a little opinion thrown in.

As to this: “If AI companies were absolutely certain about the legal basis of what they were doing, they would have no need to hide any details about it.” Couldn’t it be that revealing the details would reveal information about the algorithms used/trade secrets, etc?

There’s one key point you don’t discuss - the use of pirated ebooks. Even if I can copy a book (temporarily for transformative purposes), I still need to pay for it, no? (I’ve seen some interesting arguments that say “maybe not” but they don’t feel open and shut.) Of course, if a court forced the AI companies to “buy” all the books in the pirated LLMs, and even added in a punitive fine, I doubt that would break the bank.

I'm not an IP lawyer, but I have had some training in commercial and IP law as an engineer, musician and writer. A few points:

1. Fair use is subject to a number of tests. One of the key ones is public utility. While on the surface of it, LLM's provide some measure of public utility, the fact that these services are monetising that utility diminishes the "public" nature of that utility.

2. Fair use is an exception in copyright laws - an edge case. It is not the core principle from which such laws are generally applied. On the other hand, CONSENT is very much a core principle. There'd be a strong equity argument that consent cannot be countermanded by fair use exceptions when performed on the wholesale scale with which AI companies have so flagrantly bypassed the concept of consent. In copyright law, there is no such thing as "Asking for forgiveness because asking for permission takes longer." OpenAI, Google and their ilk are trying to convince us that forgiveness is an available option when it's not.

3. In academic publishing, there is a principle of global consent to "fair use" in creating new works that contribute to the "state of art." However, there are clear demonstrations available which show that LLM generated academic papers have significantly muddied the "state of art" in multiple fields. Many journal publishers are having to heavily rework their processes for peer review after a massive influx of LLM-generated papers which are being used to cynically manipulate citation counts and support the employability of demonstrable charlatans. I think a "reasonable person" test would find that such usages would indeed NOT pass muster as fair use.

4. Public-adjacent domain licenses (e.g. GPL, LGPL, MIT license, Creative Commons licenses) are a special case that also needs consideration. They provide for open use of a given IP subject to strict terms around attribution, non-commercial use, etc. Simple scraping of works licensed via such models does not require fair use as the license is already permissive. I would argue that fair use becomes inapplicable in such cases. However, reproduction and/or creation of derivative works from said IP without adhering to the license terms would be a clear breach of the license terms, requiring redress. If AI vendors/model trainers have not considered this, they are probably in breach of copyright. They also risk the specific case extending to the general (i.e. breach of publishing licenses) if this specific argument is upheld.

5. This is a weird edge case, but bear with me. Let's say someone writes a book, paper, song, etc... that is so distinctive that there's only one instance of it that an LLM-like ingest process can generate weights for in a given category. The chances that those weights will reproduce something very similar to that work - to the point of duplication - might leave the AI vendors having to prove that substantial modification is built into their algorithms, even for singular/small sample sizes for a given domain/category. The burden of proof would most reasonably fall on them in this circumstance.

6. There's a legal precept called "The fruit of the poisoned tree," which will be a huge risk for AI vendors - and one that if they are smart, they will settle out of court on for their larger disputes. This is an argument that the IP holders/publishers should have a field day with. Especially if the LLM owners have ingested works that were DRMed, but got around that by finding rogue PDFs, unlicensed EPK/PUB files, etc... If they got around this by purchasing Journal subscriptions, etc... but the journal's access T&Cs did not give permission to scrape more than a specific amount of any given article, they are in breach of contract. That obviates fair use.

7. "I build a custom lawnmower for my lawnmowing business. My neighbour takes my lawnmower without my consent and charges my customers and others for lawnmowing services. The income my neighbour receives using my lawnmower is effectively the proceeds of an unlawful act, and said neighbour is not entitled to it, even though he pushed the lawnmower around the respective yards. Giving my lawnmower back to me does not entitle him to keep the illegally obtained income." Jury nullification, done.

I'd be very careful saying "The AI companies are probably in the clear." These principles would all be fair game for a Court of Equity argument, even if a common law argument for fair use was accepted under Common Law. While they AI vendors might get an initial pass, appeals and potential mistrial motions under these principles could get very expensive for them; to the extent that I wouldn't be in any rush to purchase AI stocks.